Effective Enforceability of EU Competition Law Under Different AI Development Scenarios

A Framework for Legal Analysis

This post examines whether competition law can remain effective in prospective AI development scenarios by looking at six variables for AI development: capability of AI systems, speed of development, key inputs, technical architectures, number of actors, and the nature and relationship of these actors. For each of these, we analyse how different scenarios could impact effective enforceability. In some of these scenarios, EU competition law would remain a strong lever of control; in others it could be significantly weakened. We argue that despite challenges to regulators’ ability to detect and remedy breaches, in many future scenarios the effective enforceability of EU competition law remains strong. EU competition law can significantly influence AI development and help ensure that its future development is safe and beneficial. We hope that our scenario-based framework for analysing different possibilities for future development can assist with further work at this intersection.

Why EU Competition Law is a Key Lever

In a world being transformed by artificial intelligence (AI), EU competition law is likely to be a powerful tool that could either help or hinder other aims of AI governance. EU competition law has jurisdiction over foreign companies that are active in the EU, such as US Big Tech (indeed, these companies have been the focus of EU competition law enforcement in recent years).

But EU competition law is also profoundly challenged by AI progress. The rapid and complex development of AI is already challenging effective enforceability – as well as reshaping our economies, politics and societies. ‘Effective enforceability’ is a term that we use to refer to how effective competition law is in achieving its objectives. Effective enforceability can depend on a wide variety of factors. For present purposes, we will focus on whether:

- the conduct in question falls within the jurisdictional scope of competition law (and is not e.g. protected by sovereign immunity rules);

- the law is written and applied by the courts in a way that is in line with the legislators’ intentions (and there are not lacunae or loopholes);

- regulators have the independence, resources and expertise to effectively detect infringements and bring successful cases against them; and

- competition law can effectively remedy and sanction the breach in a way that addresses the harm.

The field of AI shares the goal of Artificial General Intelligence (AGI): “highly autonomous systems that outperform humans at most economically valuable work”. AI is a general-purpose technology like the steam engine or electricity, and if it continues its rapid progress, its effects on the economy and society could be as ‘transformative’ as those of the industrial revolution – hence the term ‘transformative AI’ (TAI).

Can EU competition law remain ‘effectively enforceable’ in future AI development scenarios, especially towards the development of TAI? There is little agreement amongst experts about AI development trajectories, so anticipatory governance needs to consider a range of scenarios. We map this along six development variables, as set out below.

Effective Enforceability of Competition Law across Six Variables

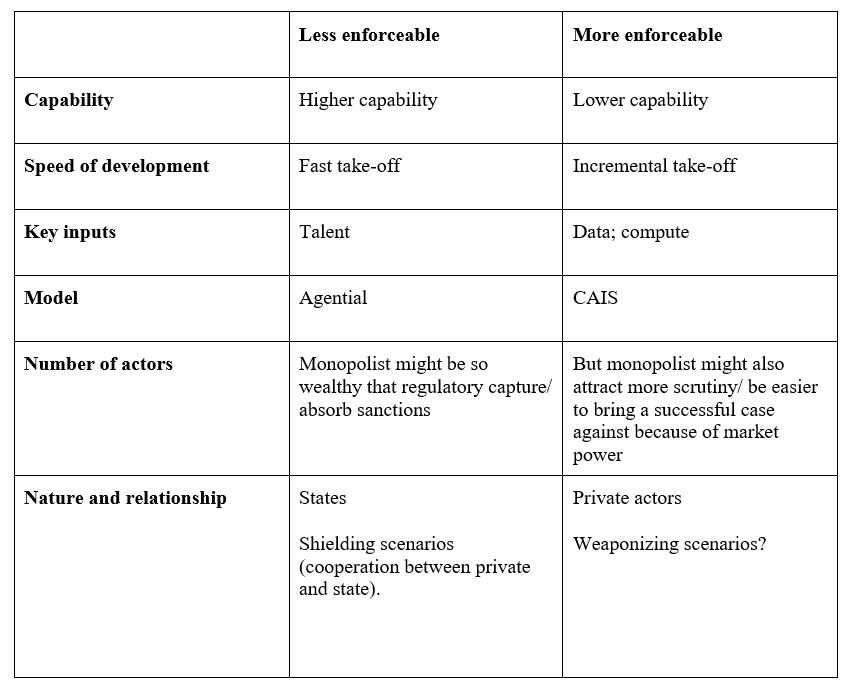

1. Capability. This variable refers to the state of technological capabilities: the tasks and ‘work’ that can be accomplished by an AI system or collection of systems.

Figure 1: AI Capability & Impact (including high-end milestones such as human-level machine intelligence and superintelligence)

All else being equal, competition law enforcement is more likely to be effective when AI has lower capability. From the point TAI is developed, private actors would likely have more scope to evade competition law investigation or detection by using their advanced AI capabilities (such as improved communications, surveillance or financial structuring) to conceal their conduct, for example participation in a cartel or the extent of their market power in an abuse of dominance scenario. A high-capability system may also be able to generate substantial wealth. On the one hand, this could lead to more antitrust scrutiny, because the increase in wealth could provoke a backlash and draw more regulatory attention to the actor. On the other hand, if the wealth is generated in a less perceptible way, it could lead to extreme regulatory capture and therefore reduce the willingness of regulators to bring a case.

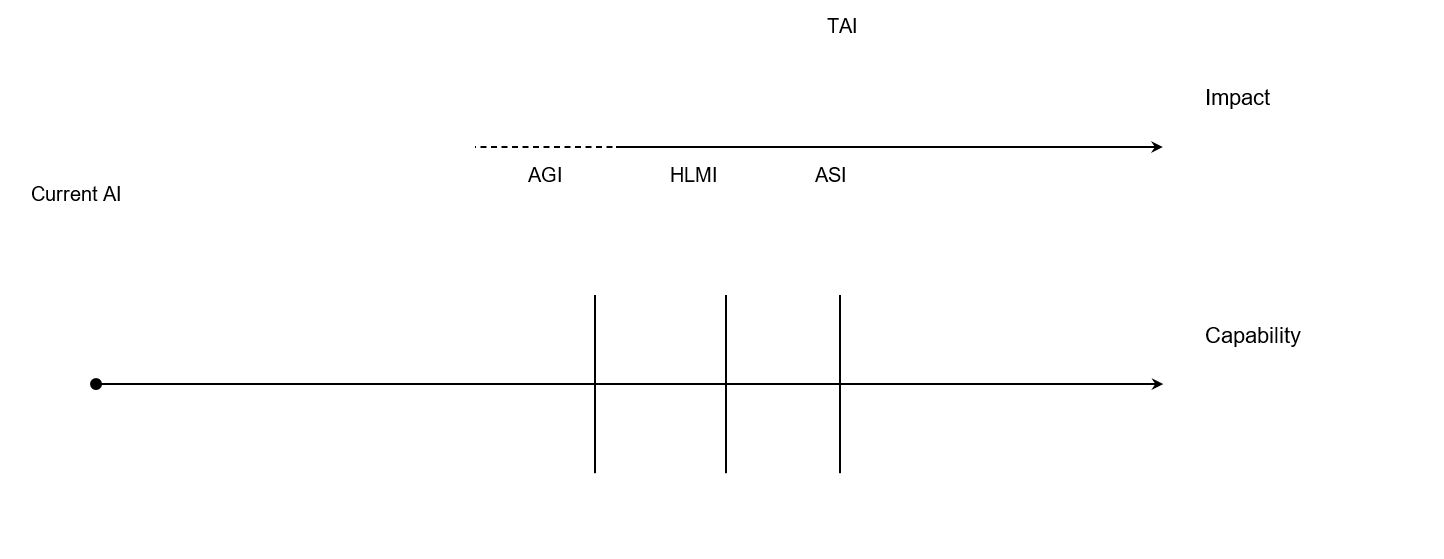

2. Speed of development. AI could be developed in a rapid and general way (‘rapid take-off’) or through more incremental, sequential and prosaic development, or anywhere on the spectrum between these two extremes. Progress in chess-playing was slow, whereas progress in language models has been fast. Speed could also vary throughout the development process.

Figure 2: Speed of AI development

Regulatory enforcement, and within that competition law enforcement, will likely be weaker the faster the speed of development. New technologies may breach the law in novel ways that should be caught by existing rules but instead fall through the cracks or give rise to lacunae in the law. The regulator may struggle to bring a case quickly enough to address the harm. A fine several years down the line may not be enough to restore competition because, for example, competitors have already been forced to exit the market, and the perpetrator firm may by then have made enough windfall profit over a number of years to make the conduct worthwhile.

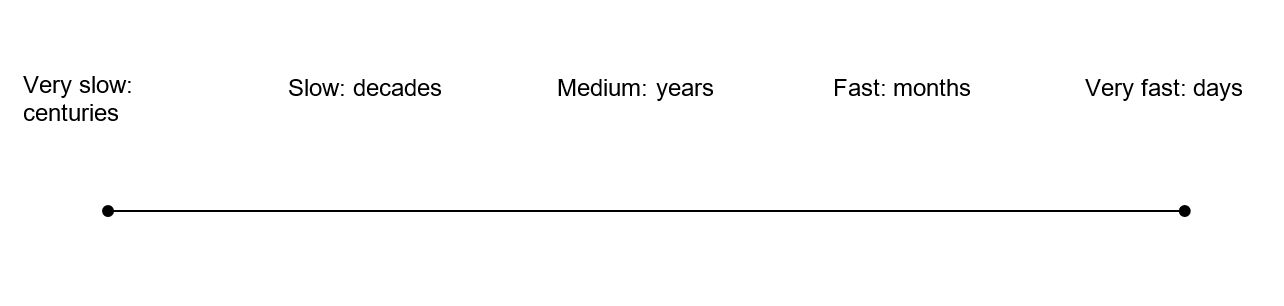

3. Key inputs into AI development. Three key inputs drive advances in AI: algorithmic innovation, computational resources (hardware or ‘compute’), and data. All three are important, yet we can conceive of one of these inputs being the most constrained and therefore a bottleneck.

Figure 3: Extent to which each major input is a constraint.

The key input driving AI advancements could be relevant as part of the assessment of market power, which is particularly pertinent in an abuse of dominance or merger control scenario. The effective enforceability of competition law may depend on which input is the bottleneck. If compute is the bottleneck and progress relies on large amounts of computing power, competition law may be more relevant, because compute is likely to be easier to regulate than data or talent (the driver of algorithmic innovation). This is because compute is more easily measured and quantified as part of any market power assessment. As a remedy, compute may also be more easily ‘transferred’ or distributed than talent (which involves flight risk) or data (which faces data protection issues). ‘Structural’ separation of compute may also be easier (e.g. a divestment in a merger scenario to create a competitor).

4. Model of AI system. The technical-level model of a highly capable AI system could vary on a spectrum between, at one end, a singular agential model (such as a goal-directed autonomous reinforcement learning (RL) agent) and, at the other, a more distributed, disaggregated ‘Comprehensive AI Services’ (CAIS) model.

Figure 4: the spectrum of AI model designs

Competition law would be more effectively enforceable against a CAIS technical structure than against a monolithic one. First, a CAIS model should be easier to monitor. Under CAIS, each service interacts with other services via clearly defined channels of communication, so the system as a whole is interpretable and transparent even though each individual service may be opaque. Second, structural remedies (i.e. remedies that involve breaking up a company) seem more achievable for a CAIS model than for a singular agent model. A CAIS company would be a vertically or horizontally integrated company in which each service is technically separable from the others and could function as an independent company. A singular agent system is likely to be harder to separate, though several companies could each have their own singular agent.

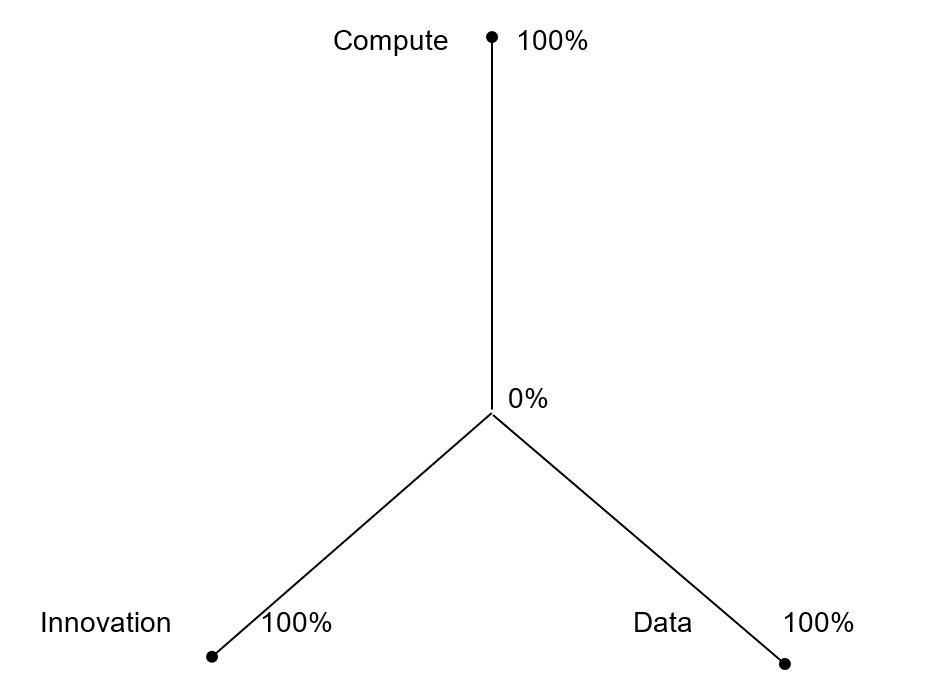

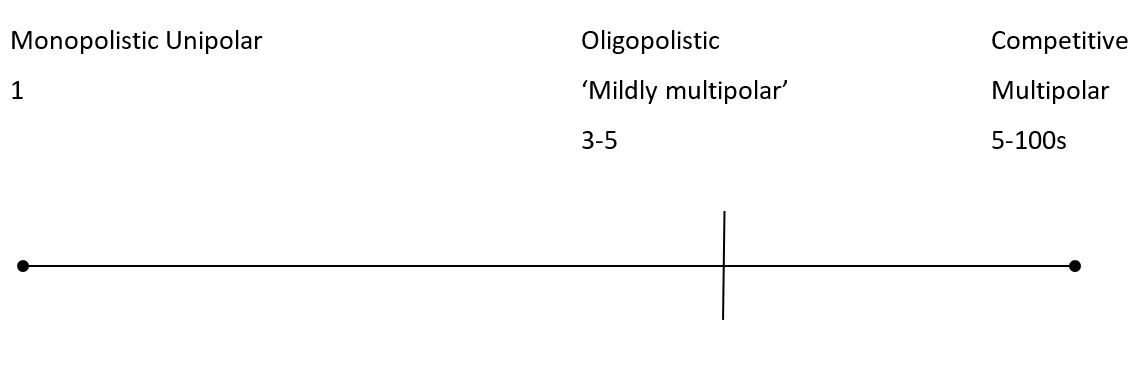

5. Number of actors. At the monopolistic or unipolar extreme, a single actor is the clear leading AI developer or deployer. At the opposite extreme, there may be a multipolar situation, with many actors (in the tens or hundreds) developing and deploying AI with comparable levels of capability. In between, we could consider a ‘mildly multipolar’ or oligopolistic scenario, with a more defined group of three to five actors.

Figure 5: the spectrum of number of organisations deploying AI

In a monopolistic scenario, effective enforceability may be higher than in a multipolar scenario, as the market will be less competitive. If the monopolist is abusing its dominance, antitrust authorities should find it easier to detect the conduct and to establish that the monopolist has market power for the purposes of bringing a successful antitrust claim. Acquisitions by that monopolist may also be subject to a more stringent merger control assessment than in a multipolar scenario. However, one potential outcome of a monopolistic scenario is concentrated control of the technology, wealth and power – with potentially negative implications for enforceability.

6. Nature of actor. The nature of the actors is important when looking at the enforceability of competition law. All other variables being equal, competition law will be applicable to a private TAI actor from a jurisdictional perspective because the actor is likely to constitute an ‘undertaking’ offering goods and services. But an actor that is a state or linked to a state may not be subject to EU competition law if it can rely on various defences based on its sovereign status. These defences mean the actor could fall outside the jurisdiction of EU competition law. The line between state and company is blurred, however, because acts that are commercial in nature do not benefit from state immunity. Overall, though, it will be more difficult to apply competition law to an actor that is, or acts like, a state.

Conclusion

The future of AI development is highly uncertain. We sought to reduce that uncertainty by using a scenario-based framework to examine how different variables affect the effective enforceability of competition law. We summarise these below. Despite challenges, we find that effective enforceability remains strong in many scenarios, and therefore that competition law will likely remain a key shaper of future AI development. The AI governance and competition law fields must work together to help ensure future AI development is safe and beneficial to consumers and society, and we hope these findings will be useful to future work at this intersection.