We Don’t Need No Education?

Teaching Rules to Large Language Models through Hybrid Speech Governance

At the beginning of this week, a new start-up called Vectara published figures on how often chatbots veer from the truth, i.e. “hallucinate”. The company’s research estimates that chatbots invent information at least 3 percent of the time, and in some cases as often as 27 percent. Given that such systems are used in nearly all domains, we might want them to follow more stringent rules of accuracy. Yet truth-related rules are not the only rules for AI systems that warrant societal scrutiny, and how these systems are trained to follow such rules will be crucial. In this blog post, we argue that a new perspective is key to tackling this challenge: “Hybrid Speech Governance”.

LLMs and Rules

A large language model (LLM) is a specialised type of artificial intelligence (AI) that has been trained on vast amounts of text to “understand” existing content and generate original content. Although the terms “training” and “learning” have been criticised as anthropomorphising, they have become established to describe how the performance of LLMs is improved. Chatbots such as ChatGPT or Google Bard are based on LLMs (for technical details, see e.g. here).

Many normative expectations can be directed at LLMs, and these expectations can be formulated as rules. The conceivable rules are diverse, for example:

- “What takes the form of a statement should be true”;

- “What takes the form of a request should not recommend that users hurt themselves”;

- “The output should not create incentives to build a para-social relationship with the machine”; or

- “The outputs should not reinforce stereotypes”.

The list goes on and on. This variety of rules and goals is one reason why multi-agent systems are a core area of research in contemporary artificial intelligence: a multi-agent LLM system consists of multiple decision-making agents that interact in a shared environment to achieve common or conflicting goals. As lawyers, we are interested in rules that protect the interests of individuals, groups, organisations, or society as a whole. The question thus arises as to who should formulate such rules and what the relationship is between the private rules set by companies and state law.

The issues discussed here can be seen as part of the alignment problem discussed in the sociology of technology, which concerns the broader question of how the values inscribed in AI technology can be brought into line with human values. In view of the potentially serious real-world effects of LLMs, regulators around the world are increasingly proclaiming: “They Do Need Some Education”. Regulators have their own rules of public interest, which they want to convey to such systems.

Analysing the Rule-Making for LLMs

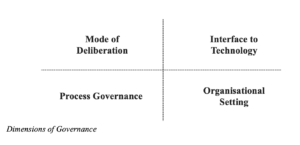

In the industry, the impact that the output of LLM systems can have on individuals, groups, organisations and society, and the rules designed to mitigate it, are often discussed under the heading of “Trust & Safety”. The rules that determine the outputs of LLMs are at the centre of the regulatory debate because, given these implications, many argue that this area should not be governed by industry alone. We propose sorting the development and implementation of those rules according to the following governance dimensions:

The Mode of Deliberation concerns who issues the rules for LLMs in the first place. Are they set solely by the developers of the services, as with the set of 21 rules for Google DeepMind’s not yet released chatbot “Sparrow”? Are there state-set rules that have gone through a parliamentary process, or do the rules originate from multi-stakeholder arrangements?

The Interface to Technology dimension describes the mechanisms by which systems learn to follow the previously defined rules. The rules can be trained into a model through conventional reinforcement learning from human feedback or even by another AI system that trains the respective LLM. An example of the latter constellation is the multi-stage learning process known as “Constitutional AI”, in which an LLM is trained by an AI system on the basis of fundamental values defined by the company: the “Constitution”.

Process Governance addresses how the processes of rule creation and implementation are structured: Are they left solely to entrepreneurial autonomy, or are there industry standards or even government rules?

Finally, rulemaking can be analysed by looking at the Organisational Setting. This includes the structure of actors involved in rulemaking and implementation, such as an oversight board, a small agency, a regulator, or even a worldwide council.

All these elements are, of course, interrelated. A clear distinction will not always be possible, but it should at least be sought in the interest of a rational debate on the regulatory structures within which LLMs operate.

Hybrid Speech Governance: From Social Media to LLMs

Within the regulatory structures governing LLMs, business-inspired private rules on the one hand overlap with increasing public requirements on the other. As a result, the rules that shape the outputs of LLMs are increasingly becoming a hybrid of private and public rules. This highlights the relevance of the process dimension outlined above, i.e. how the various applicable rules interact and interrelate.

We argue in favor of transferring a specific regulatory perspective from the field of social media regulation to the subject matter of LLMs: “Hybrid Speech Governance”.1)

We have introduced this perspective in the area of social media regulation as a governance perspective that seeks to overcome the dichotomy between sovereign and private rules and to focus instead on the overlaps and interdependencies between these sets of rules. It is an analytical perspective; for legal-doctrinal purposes, it still makes perfect sense to focus primarily on the private/public distinction.

On social media platforms, the regulatory structures governing users’ communicative behaviour can be observed to be hybridising. This is because, from a legal perspective, platform operators voluntarily follow sovereign standards, while sovereign rules increasingly influence private ordering. We argue that although this regulatory approach resembles the familiar category of co-regulation, it has a special feature: whereas co-regulation creates an upstream sovereign legal framework within which private rules can be enacted, sovereign and private communication rules are inextricably linked in the area of social media. From a governance perspective, welfare-oriented goals and entrepreneurial goals are therefore not arranged in a layered hierarchy but are pursued at the same level. In short, Hybrid Speech Governance refers to the overlap, interdependence, and interrelation of private and public communication rules, which all relevant actors, including lawmakers, need to understand in order to make informed decisions.

If LLM providers increasingly align their rules voluntarily with public standards while at the same time being subject to ever tighter regulation (which also aims to influence their own rulemaking), a similar hybrid field opens up. Such hybrid regulatory structures challenge legal systems that operate on the premise of a dichotomy between private and public ordering: What is the basis of validity for rules within hybrid regulatory structures? To what extent do requirements that have traditionally applied only to state actors, in particular the rule of law and fundamental rights obligations, take effect in hybrid regulatory structures?

EU Legislation on LLMs: Setting Sail for Hybridity

The EU has made initial attempts to address these challenges of hybrid governance: Art. 5 of the Terrorist Content Online Regulation and Art. 14 of the Digital Services Act (DSA) transfer originally state-centred concepts to the private ordering of digital companies, using general terms and conditions as leverage.2) The DSA regulates how platforms govern speech through private rules and requires them to have due regard to fundamental rights, a novel approach that embraces the hybridity of the governance structure. The implications of this form of regulation must be examined in detail by case law and doctrine,3) but such a modern approach could turn out to be future-proof. The EU should now align its regulation of LLMs with the hybridity of such regulatory structures as part of a coherent digital strategy.

First, it cannot be excluded that LLMs are already subject to certain content moderation rules of the DSA. It is argued, certainly with good reason, that LLMs are not “hosting services” within the meaning of Art. 3(g)(iii) DSA: the AI-generated output is prima facie not provided by other users but presented by companies as their own content. Considering, however, that LLMs often display output generated on the basis of information provided by other users, it seems worthwhile at least to examine more closely whether such services may be classified as online search engines (Art. 3(j) DSA). In any case, LLMs that are built into the architecture of a platform might fall within the scope of the DSA. In this respect, the hybrid approach of the DSA could at least partly apply to LLMs in the enforcement of general terms and conditions, admittedly without the EU lawmakers having originally had this specific application in mind.

Indeed, at the time of the Commission’s proposal for an AI Act in April 2021, the debate on LLMs was not yet very lively. The original proposal did not focus sufficiently on LLMs, or, as some have put it: “ChatGPT broke the EU plan to regulate AI”. The current trilogue negotiations (see the respective 4-column document here) now offer the opportunity to properly reflect the hybridity of the regulatory structures governing LLMs. In this regard, the European Parliament’s ideas are particularly promising. Parliament assumes, as a general principle, that AI operators should make their best efforts to respect the Charter as well as the values on which the Union is founded (Art. 4a(1) EP Version). More specifically, in its proposed Art. 28b, Parliament covers LLMs as a specific form of foundation model (Art. 3(1c) EP Version). Accordingly, before launching an LLM-based service, a provider must “demonstrate through appropriate design, testing and analysis the identification, the reduction and mitigation of reasonably foreseeable risks to health, safety, fundamental rights, the environment and democracy and the rule of law prior and throughout development with appropriate methods such as with the involvement of independent experts, as well as the documentation of remaining non-mitigable risks after development” (Art. 28b(2)(a) EP Version). Additionally, providers of LLMs must “train, and where applicable, design and develop the foundation model in such a way as to ensure adequate safeguards against the generation of content in breach of Union law in line with the generally-acknowledged state of the art, and without prejudice to fundamental rights, including the freedom of expression” (Art. 28b(4)(b) EP Version).

Irrespective of whether it is appropriate, in terms of regulatory policy, to build on specific IT concepts such as “foundation models”, it could make sense for the AI Act to adopt the hybrid regulatory concept of the aforementioned EU platform laws even more explicitly.

The European Parliament thus reveals an understanding of the hybrid nature of the regulatory structures that determine the outputs of LLMs. The suggested amendments have the potential to weave rules that traditionally apply only to state actors into the levels of Mode of Deliberation, Interface to Technology and Process Governance. Detailed proposals for improving the EU’s proposed rules on LLMs must be made elsewhere. However, one thing is clear: the trilogue negotiations should consistently follow an approach of hybrid governance. There is now a legislative opportunity to ensure that LLMs are taught in the general interest while respecting the business objectives of companies. At a time when the richest person in the world is incorporating his eccentric ideas not only into the content moderation system of a huge social media platform but arguably into an LLM, too, it is worth taking a holistic view of the horizontal effects of originally state-centred concepts. In this regard, the AI Act could turn out to be “Another Brick in the Wall” of adequate Hybrid (Speech) Governance in the EU’s digital strategy.

References

| No. | Reference |
|---|---|
| 1 | Wolfgang Schulz and Christian Ollig, ‘Hybrid Speech Governance – New Approaches to Govern Social Media Platforms under the Digital Services Act’, Journal of Intellectual Property, Information Technology and E-Commerce Law (JIPITEC) 14(4), forthcoming. |
| 2 | On this regulatory technique in platform regulation generally: Tobias Mast, ‘AGB-Recht als Regulierungsrecht’, JuristenZeitung (JZ) 78 (2023), 287. |
| 3 | For first thoughts on this regulatory technique: Tobias Mast and Christian Ollig, ‘The Lazy Legislature: Incorporating and Horizontalising of the Charter of Fundamental Rights through Secondary Union Law’, European Constitutional Law Review (EuConst), forthcoming. |