Frontex and ‘Algorithmic Discretion’ (Part I)

The ETIAS Screening Rules and the Principle of Legality

This contribution, presented in two parts, offers a predictive glimpse into the rule of law challenges arising from the European Border and Coast Guard Agency’s (Frontex) primary responsibility for the automated processing and screening rules of the soon-to-be-operational European Travel Information and Authorisation System (ETIAS) at the EU’s external borders. It reflects upon these challenges in light of the recent judgment of the Court of Justice of the European Union (CJEU) in Ligue des droits humains on the automated processing of passenger name record (PNR) data under the PNR Directive. Like ETIAS, the PNR Directive “seeks to introduce a surveillance regime that is continuous, untargeted and systematic” (Ligue des droits humains, par. 111) and involves automated risk assessment of personal data followed by manual processing of resulting ‘hits’.

This contribution is situated in the context of the increasing automation of EU external border control and aims, in part, to argue that the regulatory design of ETIAS raises issues in relation to the rule of law principles of legality (Part I) and of effective judicial protection (Part II). In Part I on legality, I argue that the ETIAS screening rules algorithm illustrates how automation can lead to what I suggest is a new form of arbitrariness – which I refer to as ‘algorithmic discretion’. This can be defined as a situation where the exercise of power and discretion and their limitations are not sufficiently specified at the legislative level but are delegated to an algorithm instead.

ETIAS Decision-making in a Nutshell

The soon-to-be-operational ETIAS is a newcomer in the family of large-scale IT systems used in the field of EU migration control. The system will act as an automated, data-driven risk filter that visa-exempt third-country nationals must pass to enter the EU. To that end, they are required to provide personal data, which will be mined by the system. Its purpose is to make pre-arrival predictive assessments of whether visa-exempt third-country nationals (previously exempted from any form of pre-arrival risk assessment) would pose a security, ‘illegal immigration’ or high epidemic risk if admitted to the EU (Articles 1(1), 2, 4 ETIAS Regulation).

Under the Regulation, visa-exempt third-country nationals must submit personal data to ETIAS prior to their arrival at an EU external border, including, inter alia, their sex, date, place and country of birth, nationality, city and country of residence, level of education and current occupation. Automated processing of their application to enter the EU will be based on this data. The data categories listed here are those used by the screening rules algorithm discussed below (Articles 17(2), 20(5) ETIAS Regulation). I define an ‘automated’ system as a technical system that can act independently to perform a function with no or minimal human intervention.

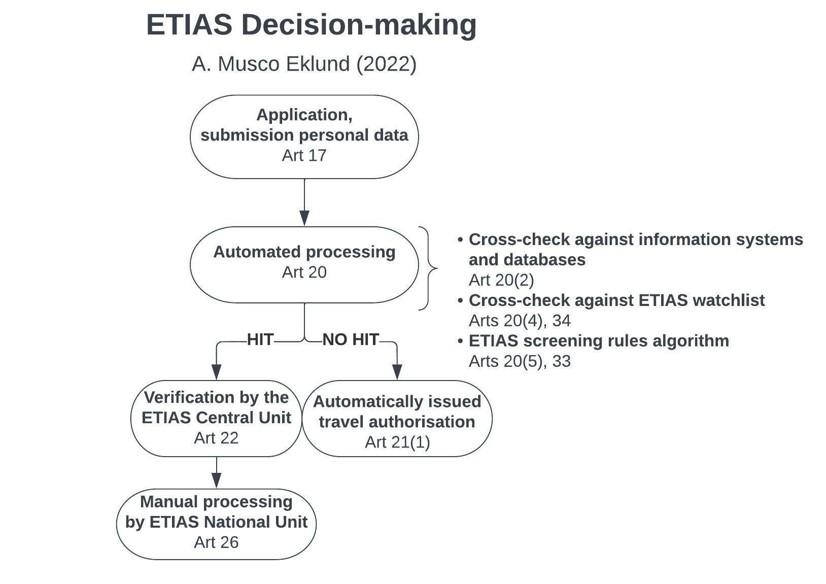

In the case of ETIAS, if the automated processing does not report any ‘hit’, the requested authorisation will be issued automatically. In contrast, where the automated processing reports a ‘hit’, the application will be manually processed (meaning processed by a human) in two steps. This process is briefly summarised below:

1) Verification by the ETIAS Central Unit, established within Frontex.

2) Manual processing by the staff at one of the ETIAS National Units established within each Member State, which are responsible for making the final decision to issue the requested authorisation to enter the EU or, alternatively, to refuse the application.

It follows from the above that ETIAS is fully automated when it comes to positive decisions, and a semi-automated decision support system in cases of a ‘hit’.
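The routing just described can be sketched in a few lines of Python. This is a minimal illustration of the logic as summarised in this contribution, not a description of the actual ETIAS software: the names `Outcome` and `route_application` are hypothetical, and the sketch assumes for simplicity that every ‘hit’ verified by the ETIAS Central Unit is passed on to an ETIAS National Unit.

```python
from enum import Enum
from typing import List, Optional, Tuple


class Outcome(Enum):
    ISSUED_AUTOMATICALLY = "authorisation issued automatically"
    ISSUED_BY_NATIONAL_UNIT = "authorisation issued by ETIAS National Unit"
    REFUSED_BY_NATIONAL_UNIT = "application refused by ETIAS National Unit"


def route_application(
    hit: bool, national_unit_issues: Optional[bool] = None
) -> Tuple[List[str], Outcome]:
    """Return the stages an application passes through and its outcome.

    Hypothetical helper: `hit` is the result of the automated processing,
    `national_unit_issues` the human decision taken in case of a hit.
    """
    stages = ["automated processing"]
    if not hit:
        # No hit: a positive decision is taken without human involvement.
        return stages, Outcome.ISSUED_AUTOMATICALLY
    if national_unit_issues is None:
        raise ValueError("a hit requires a human decision by a National Unit")
    # Hit: two manual steps. Simplifying assumption: every hit is passed
    # on from verification to the National Unit.
    stages.append("verification by ETIAS Central Unit (Frontex)")
    stages.append("manual processing by ETIAS National Unit")
    if national_unit_issues:
        return stages, Outcome.ISSUED_BY_NATIONAL_UNIT
    return stages, Outcome.REFUSED_BY_NATIONAL_UNIT
```

The point of the sketch is structural: a human appears only on the branch that follows a ‘hit’, whereas the positive branch ends inside the automated stage.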

Figure 1. Illustration of the ETIAS decision-making process based on the ETIAS Regulation.

The ETIAS Screening Rules Algorithm and Frontex

The automated processing consists of three automated functions: 1) a cross-check against Europol data, Interpol databases and relevant EU information systems, 2) a cross-check against a new ‘ETIAS watchlist’ and 3) a predictive risk assessment by the ETIAS screening rules algorithm (Article 20(2, 4, 5) ETIAS Regulation). This contribution discusses rule of law challenges arising from automation and will therefore focus on the ETIAS screening rules algorithm, which relies on a considerably higher level of automation than the other two functions.

The ETIAS Central Unit within Frontex will be responsible for the automated processing, the ETIAS screening rules and for “defining, establishing, assessing ex ante, implementing, evaluating ex post, revising and deleting” the ‘specific risk indicators’ of the screening rules (Articles 7(2)(c), 75(1)(b, c) ETIAS Regulation). However, the ETIAS Regulation only provides a general normative basis for using the screening rules algorithm and provides very few meaningful specifications on how the components of the screening will work in practice. The main source of information on how the screening rules algorithm is intended to work is Article 33. The elements of the algorithm as described in Article 33 are interpreted and illustrated below in Figure 2.

Figure 2. Illustration of the ETIAS screening rules algorithm based on Article 33 of the ETIAS Regulation.

The procedure is as follows. Firstly, by means of a delegated act and an implementing act, the Commission will define and specify the risks related to security, ‘illegal immigration’ and high epidemic risk based on listed statistics and information on, inter alia, abnormal rates of overstaying and refusals of entry. This risk specification is referred to as the ‘specific risks’, on the basis of which Frontex will establish ‘specific risk indicators’. These specific risk indicators will serve as one of the main components used by the screening rules algorithm. The screening rules algorithm will enable profiling (within the meaning of Article 4(4) GDPR) by comparing the specific risk indicators established by Frontex, on the one hand, with the personal data submitted in the individual application, on the other. If the algorithm detects a correlation between the specific risk indicators and the personal data, it will report a ‘hit’, which means the application must be manually processed (Articles 20(5), 21(2) ETIAS Regulation).
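To make the comparison step more tangible, the following Python sketch shows one conceivable way of matching risk indicators against application data. It rests entirely on assumptions: the ETIAS Regulation does not prescribe this design, the names `matches` and `screen` are hypothetical, the placeholder values (‘country-A’, ‘occupation-B’ and so on) are invented, and the matching rule chosen here (an indicator applies only if every data category it names matches) is just one of many possible designs.

```python
from typing import Dict, List, Set

# Hypothetical representations, chosen for illustration only.
Application = Dict[str, str]          # data category -> submitted value
RiskIndicator = Dict[str, Set[str]]   # data category -> flagged values


def matches(indicator: RiskIndicator, application: Application) -> bool:
    """Assumed rule: an indicator applies only if the application matches
    every data category the indicator combines."""
    if not indicator:
        return False  # an empty indicator should never flag anyone
    return all(
        application.get(category) in flagged
        for category, flagged in indicator.items()
    )


def screen(application: Application, indicators: List[RiskIndicator]) -> List[int]:
    """Return the positions of all matching indicators; a non-empty
    result corresponds to a 'hit' that triggers manual processing."""
    return [i for i, ind in enumerate(indicators) if matches(ind, application)]


# Invented placeholder data, not real indicators.
INDICATORS: List[RiskIndicator] = [
    {"nationality": {"country-A"}, "current_occupation": {"occupation-B"}},
    {"country_of_residence": {"country-C"},
     "level_of_education": {"level-D", "level-E"}},
]
APPLICATION: Application = {
    "nationality": "country-A",
    "current_occupation": "occupation-B",
    "country_of_residence": "country-F",
    "level_of_education": "level-D",
}

hit = bool(screen(APPLICATION, INDICATORS))
```

Even in this toy version, the outcome for an individual applicant depends entirely on which combinations of values are written into `INDICATORS`, that is, on choices made outside the text of the Regulation.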

How ETIAS will function in practice depends on factors outside the ETIAS Regulation, since the Regulation merely requires that the specific risk indicators 1) are based on the Commission’s risk specification, 2) consist of a combination of certain data categories and 3) are targeted, proportionate and non-discriminatory (Article 33(4–5) ETIAS Regulation). These requirements leave wide discretion to Frontex, as they do not specify how personal traits and data will be weighed against each other in the risk assessment. This wide degree of discretion is problematic, especially since the definition of the specific risk indicators has a significant impact on how the screening rules algorithm will operate in practice. As held by the CJEU in Ligue des droits humains (par. 103), the extent of interference that automated analyses entail in respect of the rights enshrined in Articles 7 and 8 of the EU Charter of Fundamental Rights “essentially depends on the predetermined models and criteria”. These, in the case of ETIAS, are Frontex’s specific risk indicators.

One potential transparency issue is that, in contrast with the Commission’s risk specification, Frontex’s specific risk indicators are not established in an accessible legislative act. Instead, the ETIAS Regulation leaves much to the imagination, since from a technical perspective there are endless ways of designing this system. Attempts by the author and other academics (Derave et al., 2022) to obtain information from the relevant agencies, such as Frontex and eu-LISA (the agency responsible for the technical development and technical management of ETIAS), in order to clarify how the screening rules algorithm will function have not been successful. Access to relevant information and documents was refused on the grounds of the protection of the ongoing decision-making process (Article 4(3) Regulation 1049/2001) and the protection of the public interest as regards public security, a ground for refusal which, under Regulation 1049/2001, cannot be balanced against an overriding public interest in disclosure (Article 4(1)(a) Regulation 1049/2001). This gives an indication of the low level of access to information and documents on the technical design of ETIAS to be expected once ETIAS is in operation. In turn, this leads to a lack of transparency towards the legal academic community and the public at large. This is problematic since information on technical design is needed to evaluate a system’s compliance with the rule of law and fundamental rights. The CJEU stated in Ligue des droits humains (par. 194) that the requirement that PNR data be processed against pre-determined criteria precludes the use of “artificial intelligence technology in self-learning systems (‘machine learning’), capable of modifying without human intervention or review the assessment process”. However, Derave et al. (2022) have convincingly argued that machine learning techniques are likely to be used in ETIAS.

‘Algorithmic Discretion’ and the Principle of Legality

The rule of law, a cornerstone of liberal democracy and the EU legal order, has “concrete expression in principles containing legally binding obligations”. At first glance, the screening rules algorithm raises concerns in relation to one of these principles, namely the principle of legality. This principle has two aspects within a European constitutional context: formal and substantive legality. The classic formal understanding of legality is a restriction of the exercise of power by law, which requires that the exercise of power is based in law and that powers are defined by law. Substantive legality additionally entails requirements as to the quality and content of the law and its application in practice. These concern, in particular, foreseeability, clarity, limits on discretion, and accessibility. Substantive legality is, therefore, closely connected to the principle of legal certainty.

The ETIAS screening rules algorithm illustrates how automation can lead to what I suggest is a new form of inherent arbitrariness, which I refer to as ‘algorithmic discretion’. It is problematic that, by reading the ETIAS Regulation, one cannot foresee how the system will be applied in individual cases. The rules defining how ETIAS will perform when operating at the individual decision-making level are not specified in the written law (the ETIAS Regulation), but are instead transferred to the algorithm, which can be compared to a form of ‘unwritten law’ or ‘invisible law’ (cf. Hildebrandt, 2016, p. 10). In other words, how ETIAS will function largely depends on factors outside of written law, such as how the specific risk indicators are established by Frontex. This will eventually have an impact on the individual decision-making level. From a perspective of legality, even if there are legitimate reasons for vagueness in the law and a degree of unforeseeability, the law must still be sufficiently precise to rule out any arbitrary exercise of power. In the case of the ETIAS screening rules algorithm, the exercise of power and discretion and their limitations are not sufficiently specified at the legislative level, but are delegated to an algorithm instead, giving it what could be referred to as ‘algorithmic discretion’.

Institutionalising Trust in Statistical Correlations

Traditional legal decision-making (carried out by humans) is based on deductive reasoning and subsumption. This means combining the facts at hand with the relevant legal norm and drawing a conclusion, basically “if this, then that”. Such reasoning is based on certainty and causality (see Bayamlıoğlu & Leenes, 2018). By contrast, ETIAS represents an institutionalisation of trust in statistical correlations, which rest on probability and on inductive reasoning towards likely conclusions, as a basis for decision-making. This cements the techno-solutionist assumption that statistical correlations can be trusted to represent reality and are capable of supporting individual assessment. As a previous debate on Verfassungsblog has indicated, it is not so controversial to speak about “the Rule of Law versus the Rule of the Algorithm”. In light of this shift and the rule of law challenges associated with it, it is noteworthy that ETIAS is excluded from the scope of the proposed AI Act (Article 83), in particular since the proposal classifies border control management as a high-risk area (Recital 39).
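The difference between the two modes of reasoning can be illustrated with a deliberately simplified Python sketch. Nothing in it is taken from the ETIAS Regulation or from any actual system: the function names, the threshold and the invented group history are assumptions introduced solely to contrast subsumption with correlation-based flagging.

```python
from typing import Dict, List


def subsumption(facts: Dict[str, bool], norm_condition: str) -> bool:
    """Deductive reasoning: the conclusion follows from whether the facts
    of the individual case fulfil the condition laid down in the norm."""
    return facts[norm_condition]


def correlation_flag(group_history: List[bool], threshold: float) -> bool:
    """Inductive reasoning: the conclusion follows from how often an
    outcome occurred among past cases sharing the applicant's traits.
    The applicant's own conduct is not an input."""
    if not group_history:
        raise ValueError("no past cases to generalise from")
    rate = sum(group_history) / len(group_history)
    return rate >= threshold


# Invented example: an applicant who individually fulfils the condition...
individual_facts = {"condition_fulfilled": True}
# ...but shares traits with a group in which the outcome occurred in
# two of four past cases (hypothetical numbers).
history_of_similar_applicants = [True, False, False, True]

deductive_result = subsumption(individual_facts, "condition_fulfilled")
inductive_result = correlation_flag(history_of_similar_applicants, threshold=0.4)
```

In the first function the individual’s own facts determine the outcome; in the second, two applicants with identical traits receive the same flag regardless of their individual circumstances, and the result shifts with the choice of `threshold`.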

Conclusion

Automation is certainly not negative per se, and human decision-making has its own challenges in relation to discretion, such as subjectivity, bias, discrimination and explainability. However, the screening rules algorithm approach to border control is new and rests on a completely different logic compared to traditional human decision-making. In a liberal democracy based on the rule of law, there are well-established democratically enacted legal structures to deal with flaws in human decision-making. Similar structures remain to be established when it comes to the use of algorithms, reliance on statistical correlation and probability over certainty and causality, and matters of algorithmic discretion. This is particularly important when implementing unprecedented automated systems, such as ETIAS, in order to avoid undermining the principle of legality and other rule of law principles.

Part I has discussed rule of law challenges related to the principle of legality and algorithmic discretion. Part II will show how, further down the line, other rule of law principles can be at stake.

See Frontex and ‘Algorithmic Discretion’ – Part II: Three Accountability Issues.