Overcoming Big Tech AI Merger Evasions: Innovating EU Competition Law through the AI Act

Big Tech is, perhaps, too big, and growing ever bigger. Companies like Google and Microsoft evade the EU Merger Regulation by entering partnerships with smaller AI labs that fall short of shifting ownership but nevertheless increase the monopolistic power of Big Tech. These quasi-mergers are particularly problematic in the context of generative AI, which relies even more than many other services on vast computing power. To illustrate the relevance of this phenomenon, Microsoft alone concluded two cooperation agreements in the last month: one with the French startup Mistral and one with the startup G42 from Abu Dhabi. By design, most large AI models need enormous amounts of data and computing power for training. In simple terms, dominance in other markets confers an unfair advantage over startups, because of the huge amount of capital necessary to buy the relevant chips and the huge amount of proprietary data that the incumbent platforms can uniquely access. That is a dire state of affairs from an economic as well as a more fundamental, democratic perspective, as concentrating economic might in the hands of very few companies may cause problems down the road.

Therefore, we argue that the EU Commission should adjust its merger rules in the age of AI by strengthening competition oversight of providers of ‘AI models with systemic risks’. Concretely, the Commission should include the classifications introduced by the AI Act, especially that of general-purpose AI models with systemic risk, in its antitrust considerations. What sounds technical is actually rather simple. Incorporating the AI Act’s classifications into competition law would ensure that this capital-intensive industry receives the competition oversight it deserves, and that competition authorities can also take part in protecting the fundamental rights of EU citizens from systemic risks. Providers of large general-purpose AI models with systemic risk can be presumed to be large enough to warrant scrutiny in investigations of (quasi-)mergers. This would increase the Commission’s ability to effectively tackle the concentration of power in the AI supply chain. Currently, the Merger Regulation does not apply to fast-growing and highly valued startups such as Mistral. Additionally, in order to effectively monitor and intervene in market developments in the AI supply chain, the Commission must evaluate other key aspects besides shareholding and turnover – the compute threshold for AI systems in art 52(a) of the AI Act is one such aspect.

The prime example of the need for these measures is Microsoft and its quasi-mergers. The company has invested 13 billion dollars in the AI lab OpenAI, which translates into a 49% ownership stake, and recently, Microsoft entered a strategic partnership with the French AI startup Mistral. The most significant aspect of the deal between Mistral and Microsoft is that the former gets access to Microsoft’s cloud computing and its customers. In return, Microsoft gets an undisclosed amount of equity in the next funding round and the integration of Mistral into its Azure platform. This is a way to bundle AI applications on Microsoft’s platform.

This news sparked outrage among policymakers in Brussels and Paris, as Mistral had played a central role in the fierce lobbying during the dramatic finalization of the AI Act. From a competition law perspective, such vertical outsourcing (intentionally) evades the Merger Regulation. The Commission has demonstrated the ambition to address these partnerships – the EU Competition Authority promptly extended its Microsoft-OpenAI investigation to include Mistral and inquired into the impacts of AI on elections under the Digital Services Act. But there is arguably a need for new tools in the EU competition law toolkit.

Mistral: the promising European AI lab caught in a highly concentrated AI supply chain

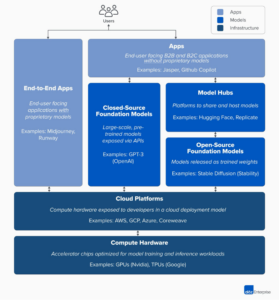

From an economic perspective, cooperation with Microsoft is necessary for Mistral due to market pressures that favor buy-ups across the AI supply chain. Figure 1 illustrates the AI supply chain: from the production of compute hardware, like AI chips, and the training of models on specialized cloud platforms (Infrastructure); to access points to the models, for example through APIs or model hubs (Models); to the applications that make use of the models (Apps). Many of the markets in the AI supply chain are highly concentrated.

Figure 1: The AI Supply Chain, borrowed from Bornstein et al

For instance, the Big Tech oligopoly of Amazon Web Services, Google Cloud, and Microsoft Azure collectively controls 67% of the cloud market. Microsoft Azure alone controls 24% of the cloud computing market, and investors would value it at roughly $1 trillion in market capitalization. The near-monopolies of Nvidia as AI chip designer and TSMC as chip producer do not help AI startups either.

Societal harms of a monopolistic AI supply chain

Tech monopolies have already created a range of social and economic harms – harms that AI will supercharge, according to a report from the Open Markets Institute. One such harm is the suppression of trustworthy information. A second is the spread of propaganda and misinformation: state-level actors (think Russian influence in the 2016 US elections and Brexit) as well as private actors (think of the Taylor Swift deepfakes) already exploit this, and generative AI increases their ability to personalize propaganda and misinformation campaigns at an unprecedented scale (a threat the Commission is attending to). A third harm is reduced security and resilience, for example if critical infrastructure such as energy and health systems relies on a few central AI systems. This creates threats from system failures, cyberattacks, rogue algorithms, and faulty data, all of which would have serious systemic implications.

As it currently stands, the EU Merger Regulation does not cover Mistral’s partnership with Microsoft. The first issue with the Merger Regulation is that it only applies to companies with a turnover of at least 250 million euros in the preceding year, which is clearly not the case for the fast-growing startup Mistral, founded only in April 2023 (art 1(2) & 5(1) Merger Regulation). The second issue is that even if the criteria for application were fulfilled, this type of cooperation is unlikely to satisfy the “decisive influence” doctrine under article 3(2) of the Merger Regulation. This doctrine typically requires the acquisition of majority ownership.

The partnerships Microsoft is engaging in avoid this criterion. In Mistral’s case, the company is relying on exclusive deals about compute and user access. In the recent case of the AI startup Inflection, Microsoft took over central talent (leaders and employees) from Inflection, which announced it would stop improving its model. This is essentially a killer acquisition without any formal acquisition, and it falls outside the scope of the Merger Regulation.

Big Tech’s practice of bundling capital, compute, and applications across the AI supply chain is problematic. For example, vertical integration creates barriers to entry in the application market. Big Tech can leverage its position in the cloud market to get unique access to cutting-edge AI models. This undermines competition in the application market. The Commission has recognized that acquisitions of non-controlling minority shareholdings may be subject to merger control rules, so there is potential for sharpening the existing tool through interpretation. However, we think it is necessary to expand the EU merger regime to prevent anti-competitive effects from taking place in the AI supply chain and society more broadly.

Integrating classifications from the AI Act into the EU competition law regime

First, the categorization of so-called ‘AI models with systemic risks’ should be included in EU competition law analysis. ‘AI models with systemic risks’ is a category defined under the AI Act as general-purpose AI models that have high-impact capabilities. The threshold for high-impact capabilities is a technical, computational one: it is met when more than 10^25 floating point operations (FLOPs, a measure of the amount of computation) are used for training a model (art 52(a) AI Act final draft). The Commission is empowered to adjust this threshold through delegated acts, which allows for a future-proof way of regulating emerging technologies.

‘AI models with systemic risks’ are enormously powerful systems. Providers of such systems will be very powerful regardless of their turnover. Further, there is a frantic race between companies to reap first-mover benefits and establish dominance as the leading provider of such systems. One way to integrate the systemic risk threshold into competition law would be to require prior approval of actions by providers of AI models with systemic risks that have the potential to constitute bundling or exclusivity. Such actions could include changes in equity (below majority thresholds), exclusive agreements on the provision of cloud computing, and the integration of models into applications (bundling). Another approach would be to require structural separation, prohibiting companies from controlling both general-purpose AI (GPAI) models and other technologies or platforms that enable them to engage in unfair and abusive practices. Such separation should account for both vertical and horizontal control, and control should be understood more broadly than holding a majority of shares. Structural separation could build on concepts that already exist within EU competition law, such as bundling and exclusivity deals.

Second, in order to effectively monitor and intervene in market developments in the AI supply chain, the Commission must evaluate other key aspects besides share-holding and turnover. The compute threshold in art 52(a) of the AI Act is one such aspect. This would allow the Commission to better account for the AI supply chain in its competition law enforcement.
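To make the idea concrete, the screening logic we have in mind can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not an existing legal test: the turnover check is reduced to the single 250 million euro figure mentioned above, the compute check uses the 10^25 FLOPs figure from the AI Act, and the `Party` type, the `screen_deal` function, and all names and numbers in the usage example are hypothetical.

```python
from dataclasses import dataclass

# Compute threshold cited in the text (art 52(a) AI Act final draft).
SYSTEMIC_RISK_FLOPS = 1e25
# Turnover figure cited in the text; the real turnover test is more detailed.
TURNOVER_THRESHOLD_EUR = 250_000_000


@dataclass
class Party:
    name: str
    turnover_eur: float            # turnover in the preceding year
    largest_training_flops: float  # training compute of its largest model, 0 if none


def has_systemic_risk_model(party: Party) -> bool:
    """True if the party trained a model with more than 10^25 FLOPs."""
    return party.largest_training_flops > SYSTEMIC_RISK_FLOPS


def screen_deal(a: Party, b: Party) -> str:
    """Hypothetical screening rule: report which trigger, if any, applies."""
    # Simplified stand-in for the turnover-based test.
    if min(a.turnover_eur, b.turnover_eur) >= TURNOVER_THRESHOLD_EUR:
        return "turnover"
    # Proposed additional trigger: compute, regardless of turnover.
    if has_systemic_risk_model(a) or has_systemic_risk_model(b):
        return "compute"
    return "none"


# Hypothetical parties with made-up figures, for illustration only.
incumbent = Party("IncumbentCo", turnover_eur=5e9, largest_training_flops=0.0)
startup = Party("StartupLab", turnover_eur=1e7, largest_training_flops=2e25)
print(screen_deal(incumbent, startup))
```

In this sketch, a partnership with a low-turnover startup would escape a turnover-only test but would still be flagged through the compute trigger, which is the gap the proposal aims to close.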

While these suggestions are preliminary ideas intended to spark further thinking rather than complete proposals, we are convinced that there is great potential in the interaction between the AI Act and the EU competition law regime. The AI Act itself provides several points of interaction with EU competition law. For example, article 58 outlines the tasks of the AI Board, which include cooperating with other Union institutions, bodies, offices, and agencies, particularly in the field of competition.

It is also relevant to note the case law development of increased interaction between competition law and data protection law. In the Facebook Germany case, the European Court of Justice ruled that breaches of the GDPR are relevant for abuse of dominant positions and may be taken into account by competition authorities in their assessment. The Court of Justice seems supportive of incorporating different EU law regimes that are relevant to digital markets in the enforcement of EU competition law. The Commission should utilize this support and use the compute thresholds in the AI Act in the EU competition law regime. This would be an essential step in updating the competition toolbox to be fit for the rapidly evolving AI supply chain.

Thanks for this thoughtful comment. I agree with a lot of this.

However, I am not sure how reading the concept of systemic risk across from the AI Act works: I don’t see how the notion of systemic risk is a proxy for market power.

To elaborate: in competition law one is worried about two firms cooperating when together they have market power to such a degree that this is harmful to competition. This harm can occur whether the firms are providing a systemic risk AI system or a lower-risk one. The problem is not the degree of risk but whether they can monopolize that AI service (eg by bundling it into their ecosystem).

In the AI Act, by contrast, systemic risk pertains not to the market power of the provider but to certain kinds of risk. Theoretically, a firm could have a 10% share of the market in a particular AI service and still be labelled as posing a systemic risk (as defined eg in recital 60 of the AI Act), but this firm raises no competition law problems.

Dear Professor Monti,

Thank you for your insightful comment! You are absolutely right that the concepts of systemic risk within the AI Act and market power in competition law are distinct. We apologize if our initial phrasing conflated the two.

However, we still believe there’s value in considering the AI Act’s classifications as a signal for potential market power issues – even if it’s an admittedly imperfect proxy.

To begin with, model size matters. The 10^25 FLOPs compute threshold for models with systemic risk indicates the sheer scale of these models. Companies capable of developing such models likely possess significant resources and market influence, even if traditional market share metrics at the time don’t suggest immediate dominance. Model size is therefore an earlier indicator of a firm’s scale than revenue. While immediate market power may still be minimal, monitoring these firms can help antitrust authorities preemptively assess long-term implications.

We understand your emphasis on market power under competition law. Our argument also recognizes the increase in systemic risks posed by ‘quasi-mergers’ involving Big Tech and AI startups, especially those developing GPAI models. Here’s where the focus on systemic risks, for example to fundamental rights, becomes critical:

First, the potential to combine diverse datasets from multiple Big Tech platforms creates significant risks to fundamental rights, regardless of traditional market power analysis. The potential for data misuse amplifies any harms from the AI model itself.

Second, integrating AI models with existing products without proper oversight exposes users, especially vulnerable groups like children, to AI-generated biases and harms on a massive scale. Consider Meta’s automatic integration of Llama-3 into WhatsApp, Facebook, and Instagram, which could expose children to deepfakes not via a separate chatbot but in their main channel of communication.

We wholeheartedly agree that refining conceptual links between competition law and the AI Act is crucial. We welcome your expertise in helping us develop more robust tools to address the unique challenges of the AI market.

Sincerely,

Tekla Emborg and Jacob Schaal